Data has become a valuable commodity in our current digital landscape. Data is lucrative, and businesses rely on it to make important decisions. As more and more people turn to data collection, tools have become available that make the process significantly easier. Web scraping is one such process, but how does it work, and is it really beneficial?

In this article, we look at what exactly data harvesting is and its benefits for businesses and individuals. We’ll also take a look at how you can get started with web scraping and why you should consider using datacenter proxies from a reliable provider like Smartproxy for this process.

What Is Web Scraping?

Web scraping is an automated data collection process. Web scraping tools have been specifically developed to collect information across multiple websites. They then parse the HTML that’s been collected in order to deliver it in a readable format. The data usually arrives as a spreadsheet or a similar structured file, which makes it easy to draw valuable insights from it.
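The parse-and-export step described above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not how any particular scraping tool works internally. The sample HTML, the `TableParser` class, and the `html_table_to_csv` function are hypothetical names made up for this example, and it assumes the data sits in a simple HTML table.

```python
import csv
import io
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []     # finished rows, each a list of cell strings
        self._row = None   # row being built, or None outside a <tr>
        self._cell = None  # text pieces of the current cell, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._cell = None


def html_table_to_csv(html):
    """Parse table rows out of an HTML string and return them as CSV text."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    out = io.StringIO()
    csv.writer(out, lineterminator="\n").writerows(parser.rows)
    return out.getvalue()


# Hypothetical page fragment standing in for downloaded HTML.
SAMPLE_HTML = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Kettle</td><td>24.99</td></tr>
  <tr><td>Toaster</td><td>31.50</td></tr>
</table>
"""

print(html_table_to_csv(SAMPLE_HTML))
```

The resulting CSV text can be saved to a file and opened in any spreadsheet application. Real pages are rarely this tidy, so a production scraper would typically need more forgiving parsing.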

Harvesting public data is generally legal. Public data is any information that’s accessible by the public without having to log in or enter specific credentials to gain access. However, there are some ethical issues to be aware of when you use data harvesting tools.

One of the ethical concerns of data harvesting is how the data is used. You should never pass off any of the data you collect as your own. You’re free to use it to influence decisions for your business, but the data isn’t your property.

Next, you should try to stay away from collecting personal information, as this can go against privacy rights. You can collect public profile information if you’re looking for influencers to work with your brand, but other than that, don’t collect personal information through web scraping.

Also, when you use data harvesting tools, make sure that you don’t overwhelm the source websites with multiple requests. This can seriously affect the user experience for other visitors and have negative implications for the business. As such, make sure that you space out your scraping requests and try to schedule them for times when the site is less active.
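One way to space out requests is to enforce a minimum delay between them. The sketch below is a minimal example of that idea, assuming a fixed interval is acceptable for the target site. The `Throttle` class is a hypothetical helper written for this article, and the `clock` and `sleep` parameters exist only so the behaviour can be checked without actually waiting.

```python
import time


class Throttle:
    """Enforces a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval  # seconds between requests
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Sleep until min_interval has passed since the last call.

        Returns the number of seconds slept (0.0 if no wait was needed).
        """
        delay = 0.0
        if self._last is not None:
            elapsed = self._clock() - self._last
            delay = max(0.0, self.min_interval - elapsed)
            if delay > 0:
                self._sleep(delay)
        self._last = self._clock()
        return delay
```

In a scraping loop, you would call `throttle.wait()` immediately before each request, for example with `throttle = Throttle(min_interval=2.0)`. The two-second value is an arbitrary assumption; pick an interval that suits the site you are collecting from.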

How Can You Use Web Scraping?

Web scraping can be used in many different ways for various situations. As an individual, you can use data harvesting to collect the prices of homes in your area to get an idea of property values. Or you can search for all the rental listings in your desired location. You can also use data harvesting to collect the prices of specific products so that you can get the best deal possible.

As a business, you can use web scraping to help you collect product prices and descriptions from your competitors to ensure your prices are competitive. You can use it to do market research on new markets you want to expand to or to gain insight into new product releases. You can use data harvesting to improve your search rankings and SEO. You can even use data harvesting to find social media influencers to promote your brand while also monitoring customer sentiment.

Benefits of Web Scraping

As you can see, there are many different ways that you can use web scraping to benefit your business or personal life. Some of the most important benefits of this data collection method include:

- Automating data collection processes

- Gaining business intelligence and insights

- Collecting unique and rich datasets

- Collecting data from websites that don’t have a public API

- Improving data management

How To Start Web Scraping?

It’s easy enough to start using web scraping as a data collection process. For one, there are many pre-built web scrapers available that you can use if you don’t have coding experience. These tools are easy to set up and start using immediately, and the developers update them and provide support when you get stuck.

However, if you know how to code and want to create a customized tool for your needs, there are numerous open-source libraries available to help you get started. While Python is one of the most popular programming languages for building web scrapers, you can also use other languages, such as JavaScript and Ruby.

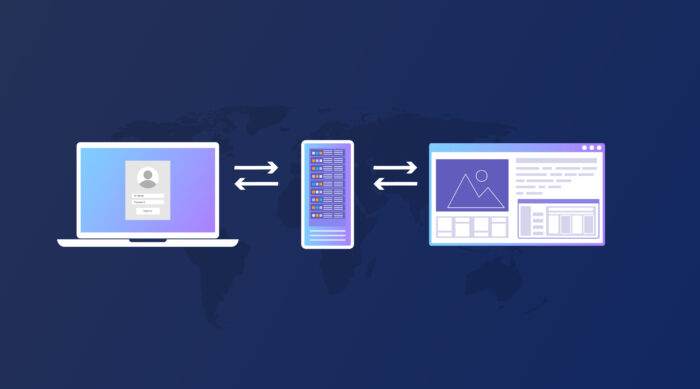

Why Use Datacenter Proxies?

The utilization of datacenter proxies is pivotal for effective and seamless web scraping. These proxies act as a mask, concealing your IP address and providing you with a new one, hence enabling you to conduct your data collection discreetly and efficiently. The following are some compelling reasons to integrate datacenter proxies into your web scraping endeavours:

- Enhanced Anonymity: Datacenter proxies provide a high level of anonymity by hiding your IP address while you’re scraping data from websites. This is crucial for avoiding detection and bans from the websites you are extracting data from.

- Overcome Geo-Restrictions: Datacenter proxies allow you to access and scrape data from websites that may be restricted in certain geographical locations. By offering IP addresses from various locations, they provide the ability to obtain a more diversified and comprehensive dataset.

- Higher Speed: Datacenter proxies generally offer higher speed and more stable connectivity compared to residential proxies. This ensures efficient and timely data extraction, which is essential for businesses seeking up-to-date and relevant information.

- Avoid IP Bans: Continual web scraping activities from the same IP address can lead to it being blacklisted by websites. Datacenter proxies mitigate this risk by allowing you to alternate IP addresses, ensuring uninterrupted data extraction.

- Reliability and Support: Reputable providers of datacenter proxies, like Smartproxy, ensure a high level of reliability and customer support. This ensures that your web scraping activities proceed without any hitches and that any issues are promptly resolved.
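To illustrate the idea of alternating IP addresses, here is a minimal sketch of round-robin proxy rotation using Python's standard library. The proxy addresses are placeholders on `example.com`, not real endpoints, and `rotating_openers` and `assign_proxies` are hypothetical helper names. Your provider's actual hostnames, ports, and authentication scheme will differ.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints; replace with the addresses your provider gives you.
PROXIES = [
    "http://proxy1.example.com:8000",
    "http://proxy2.example.com:8000",
    "http://proxy3.example.com:8000",
]


def rotating_openers(proxies):
    """Yield (proxy, opener) pairs forever, cycling through proxies in order."""
    for proxy in itertools.cycle(proxies):
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        yield proxy, urllib.request.build_opener(handler)


def assign_proxies(urls, proxies):
    """Pair each URL with the next proxy in the rotation (no network access)."""
    return list(zip(urls, itertools.cycle(proxies)))
```

Each opener returned by `rotating_openers` routes its traffic through the paired proxy, so calling `opener.open(url, timeout=10)` would send that request from the proxy's IP address. Simple round-robin is only one rotation strategy; some setups pick proxies at random or let the provider rotate addresses behind a single endpoint.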

Do You Need Datacenter Proxies for Web Scraping?

If you want to start web scraping, there are a few tools you need. First, and most obviously, you’ll need a reputable web scraper. Next, you’ll also need datacenter proxies to provide you with a different IP address. This makes it harder for websites to track your data harvesting efforts and helps you avoid IP blocks. Some websites might still block your web scraper, and when they do, you can use datacenter proxies to choose a new IP and continue your data collection process.
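The "choose a new IP and continue" step can be sketched as a simple failover loop. This is an illustrative outline under stated assumptions, not a complete scraper: `fetch_with_failover` is a hypothetical helper, the `fetch` argument is a function you supply that performs the actual request through a given proxy, and treating HTTP 403 and 429 as signs of a block is an assumption that will not hold for every website.

```python
# Assumption: these status codes indicate the request was blocked or rate limited.
BLOCK_STATUSES = {403, 429}


def fetch_with_failover(url, proxies, fetch):
    """Try each proxy in turn until one returns a non-blocked response.

    `fetch(url, proxy)` is a caller-supplied function returning (status, body).
    Returns (proxy, status, body) for the first proxy that was not blocked.
    """
    for proxy in proxies:
        status, body = fetch(url, proxy)
        if status not in BLOCK_STATUSES:
            return proxy, status, body
    raise RuntimeError(f"all {len(proxies)} proxies were blocked for {url}")
```

Keeping the network call behind the `fetch` parameter means the switching logic can be tried out with a stub before it is pointed at a real site.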

Final Thoughts

Web scraping is a valuable, automated data collection process. With a web scraper, you can quickly collect vast amounts of information across many websites. For the best results, pair your web scraper with datacenter proxies so you can avoid getting banned and continue to collect all the information you need.